Stopthatgirl7

Hey, y’all! Just another random, loudmouthed, opinionated, Southern-fried nerdy American living abroad.

This is my Lemmy account because I got sick of how unstable kbin dot social was.

Mastodon: @stopthatgirl7

- 63 Posts

- 16 Comments

7·21 days ago

…oh my GOD, they are cooked.

4·1 month ago

Right now, it’s all being funded by one person, Zhang Jingna (a photographer who recently sued and won her case when someone plagiarized her work), but it’s grown so quickly that she got hit with a $96K bill for one month.

3·1 month ago

DeviantArt is also trying to scrape artists’ work for AI. It also allows AI art to be posted and has promoted it.

105·1 month ago

I am just so, so tired of being constantly inundated with being told to CONSUME.

142·1 month ago

If they do, it’s going to be a bad time for them, since Cara has Glaze integration and encourages everyone to use it. https://blog.cara.app/blog/cara-glaze-about

Also, the resale values are…not good.

303·3 months ago

Y’all gotta stop listening to Elon Musk without the world’s largest block of salt.

14·3 months ago

Not earthquakes this big. There hasn’t been one this size there in 25 years.

33·3 months ago

Yes. When people were in full conspiracy mode on Twitter over Kate Middleton, someone took that grainy pic of her in a car, used AI to “enhance” it, and declared it wasn’t her because her mole was gone. It got so much traction that people thought the AI-enhanced pic WAS her.

12·3 months ago

I scroll through to see if things have been posted before. If I don’t see it, I assume it hasn’t been. And I use a client that doesn’t display cross-posts, so I can’t see if there are any.

9·3 months ago

?? I didn’t post this anywhere else? Or even see it anywhere else?

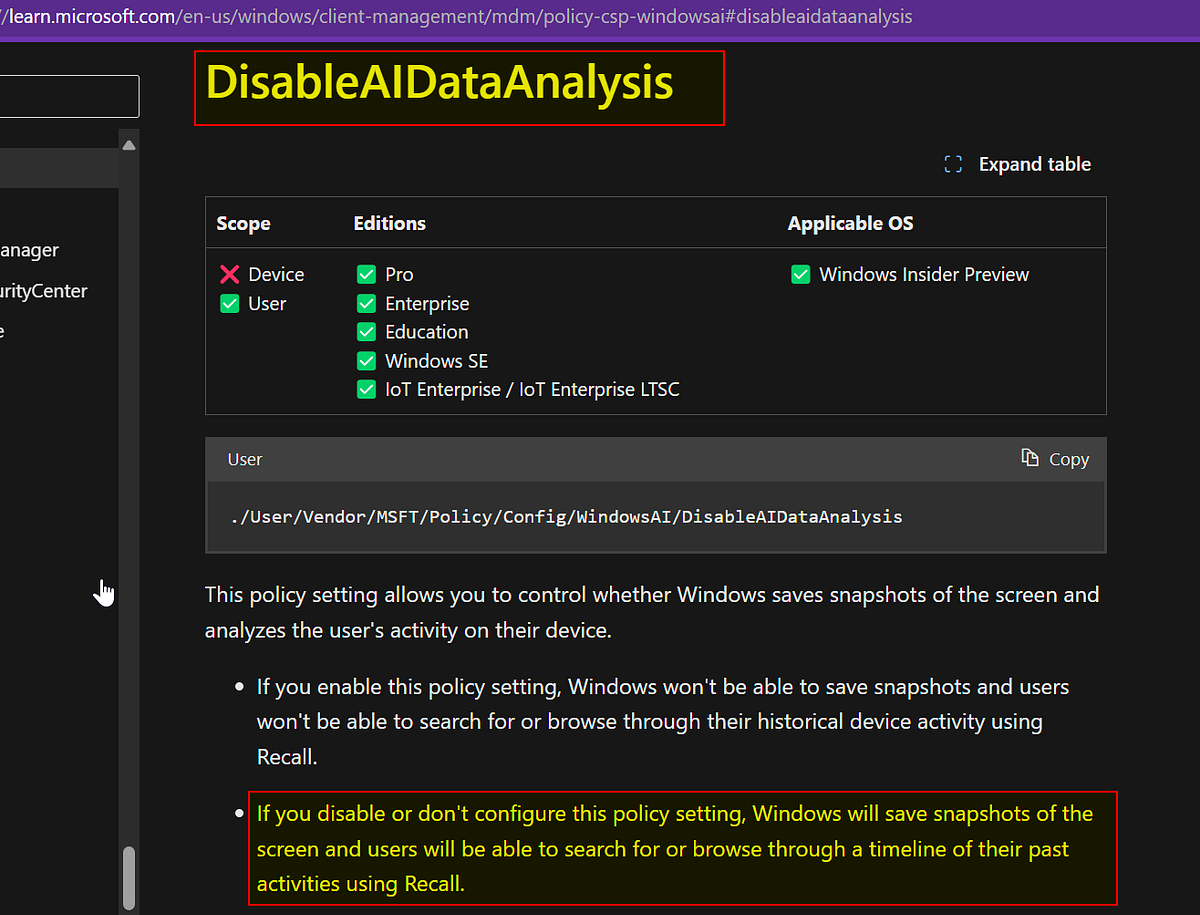

33·4 months ago

If only we didn’t live in a dystopia and that was what this was for.

51·4 months ago

Yes. Because the algorithm fills them with tweets from other people. That’s why it seems like there are still the same number of people there even though there aren’t.

9·4 months ago

Because the algorithm will always try to fill the timeline with something. It’s not going to show you empty spaces where tweets WOULD have been if the people who left were still there.

10·4 months ago

Only when it’s theirs.

Someone posted links to some of the AI generated songs, and they are straight up copying. Blatantly so. If a human made them, they would be sued, too.