I’ve seen some people on Twitter complain that their coworkers use ChatGPT to write emails or summarize text. To me this just echoes the complaints made by previous generations against phones and calculators. There’s a lot of vitriol directed at anyone who isn’t staunchly anti-AI and dares to use a convenient tool that’s available to them.

Ragdoll X

Three raccoons in a trench coat. I talk politics and furries.

Other socials: https://ragdollx.carrd.co/

- 4 Posts

- 70 Comments

“My science-based, 100% dragon MMO is already under development.”

253·1 month ago

Yeah, while I don’t doubt that noise pollution can affect one’s health, I have to wonder how much of this is just the placebo effect, like with people complaining that cellphone towers are giving them migraines or rashes.

Oh SatansMaggotyCumFart, your comments never disappoint.

11·1 month ago

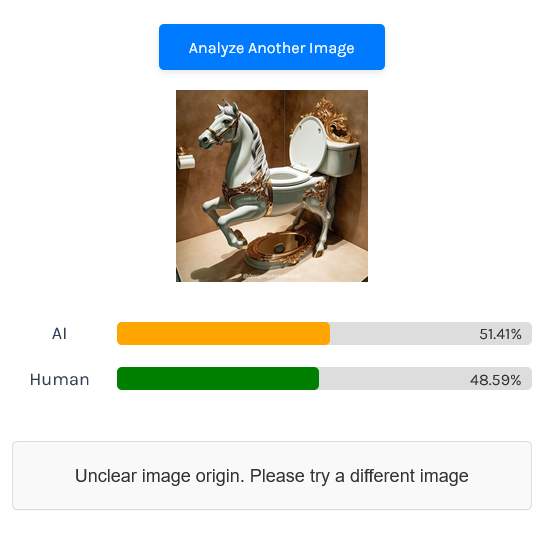

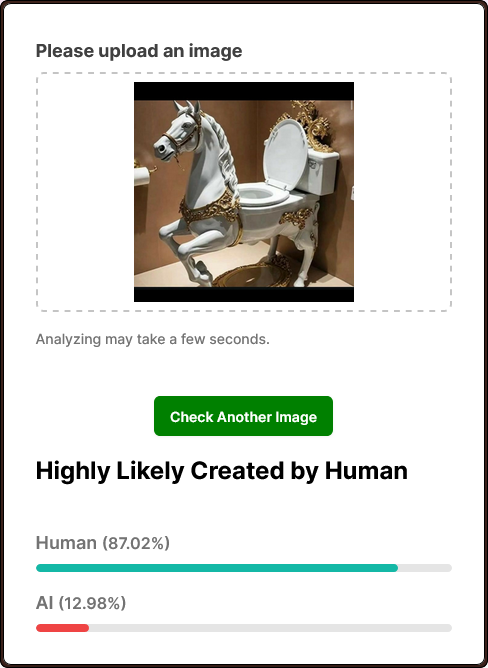

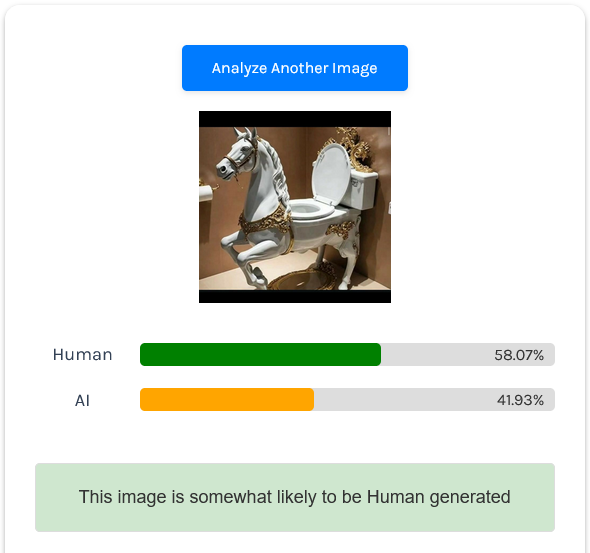

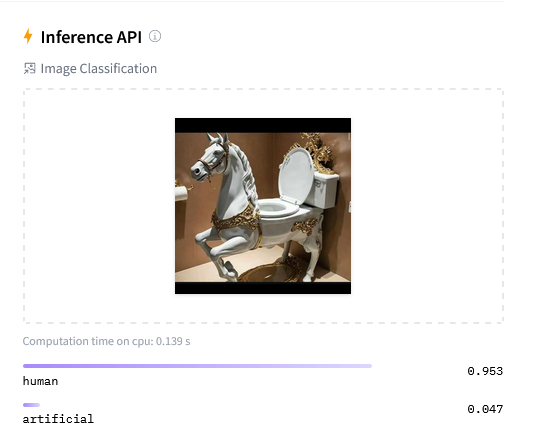

lmao no wonder something looked so off to me, the HD version makes it even clearer that it’s AI-generated, even more so considering the website it came from.

Interestingly enough even the larger image on the original website fooled most of the AI image detectors, with only one of them (isitai.com) just barely saying that the image is probably AI-generated, while all the others said with >90% confidence that it wasn’t.

I can only speculate that all of these detectors are outdated and were trained with older AI-generated images that were easier to detect.

35·1 month ago

This just screams “Gurbanguly Berdimuhamedow” even though apparently it doesn’t actually have anything to do with him lol

And I was really suspicious that this was AI-generated but apparently it’s real? /shrug

Edit: no it ain’t lol

11·2 months ago

Don’t a lot of people also keep their tax information as plain text on their PC? If someone’s really worried about that stuff being leaked I think it’s on them to download VeraCrypt or smth, and also not to use ChatGPT for sensitive stuff knowing that OpenAI and Apple will obviously use it as training data.

921·2 months ago

Maybe they should try using Claude 3.5 Sonnet to write more secure code for their systems. I’ve heard it’s the best LLM out there when it comes to coding 🤡

Kinda sounds like how worker cooperatives work tbh, but with Gabe still technically being the owner.

I remember reading a news piece a while back about how the founder of a food company made sure to transfer ownership to the employees before leaving. While we’re talking about worst-case scenarios, let’s also hope for the best and hope that Gabe has a similar plan.

181·2 months ago

No but you see he is a visionary! A real life Tony Stark!! He’ll do great things with that money like… Making ~~Twitter~~ X likes private for some reason…? I’m sure that cost a lot of money somehow /s

151·2 months ago

Ȉ̶̢̠̳͉̹̫͎̻͔̫̈́͊̑͐̃̄̓̊͘ ̶̨͈̟̤͈̫̖̪̋̾̓̀̓͊̀̈̓̀̕̚̕͘͝Ạ̶̢̻͉̙̤̫̖̦̼̜̙̳̐́̍̉́͒̓̀̆̎̔͋̏̕͝͝M̶̛̛͇̔̀̈̄̀́̃̅̆̈́͑̑͆̇ ̵̢̨͈̭͇̙̲͎͉̝͙̻̌͝I̷̡͓͖̙̩̟̫̝̼̝̪̟̔͑͒͊͑̈́̀̿̋͂̓̋̔͌̚ͅN̸̮̞̟̰̣͙̦̲̥̠͑̔̎͑̇͜͝ ̷̢̛̛͍̞̖̹̮͈͕̠̟̽̔̋̎͋͑̍̿̅̈́̋̕̚̚͜͝Y̴̧̨̨͙̗̩̻̹̦̻͎͇͈͎͓̩̐̓Ö̸͈̭̒̌̀̇͂̃͠ͅŨ̷̢̞̗͛̌͌͒̀̇́̽̓͑͝Ŕ̷͇͌ ̸̛̮̋̏̋̋̔͝W̶͔̄̐͋͑A̷̧̖̗͕̻̳͙̼͖͒L̴̩̰͙̾͑͑͑̒̏Ḻ̸̡̦̭͚̱̝̟̣̤͗̊́͐̋̈́̒͠͠͠͠͝S̸̯͚͈̠͍̆̉̑͗͊̄̒̏͆̔͊

me

I’m just a little silly :3

251·3 months ago

This did happen a while back, with researchers finding thousands of hashes of CSAM images in LAION-2B. Still, IIRC it was something like a fraction of a fraction of 1%, and they weren’t actually available in the dataset because they had already been removed from the internet.

You could still make AI CSAM even if you were 100% sure that none of the training images included it since that’s what these models are made for - being able to combine concepts without needing to have seen them before. If you hold the AI’s hand enough with prompt engineering, textual inversion and img2img you can get it to generate pretty much anything. That’s the power and danger of these things.

332·3 months ago

IIRC it was something like a fraction of a fraction of 1% that was CSAM. The researchers identified the images through their hashes, but they weren’t actually available in the dataset because they had already been removed from the internet.

Still, you could make AI CSAM even if you were 100% sure that none of the training images included it since that’s what these models are made for - being able to combine concepts without needing to have seen them before. If you hold the AI’s hand enough with prompt engineering, textual inversion and img2img you can get it to generate pretty much anything. That’s the power and danger of these things.

4012·3 months ago

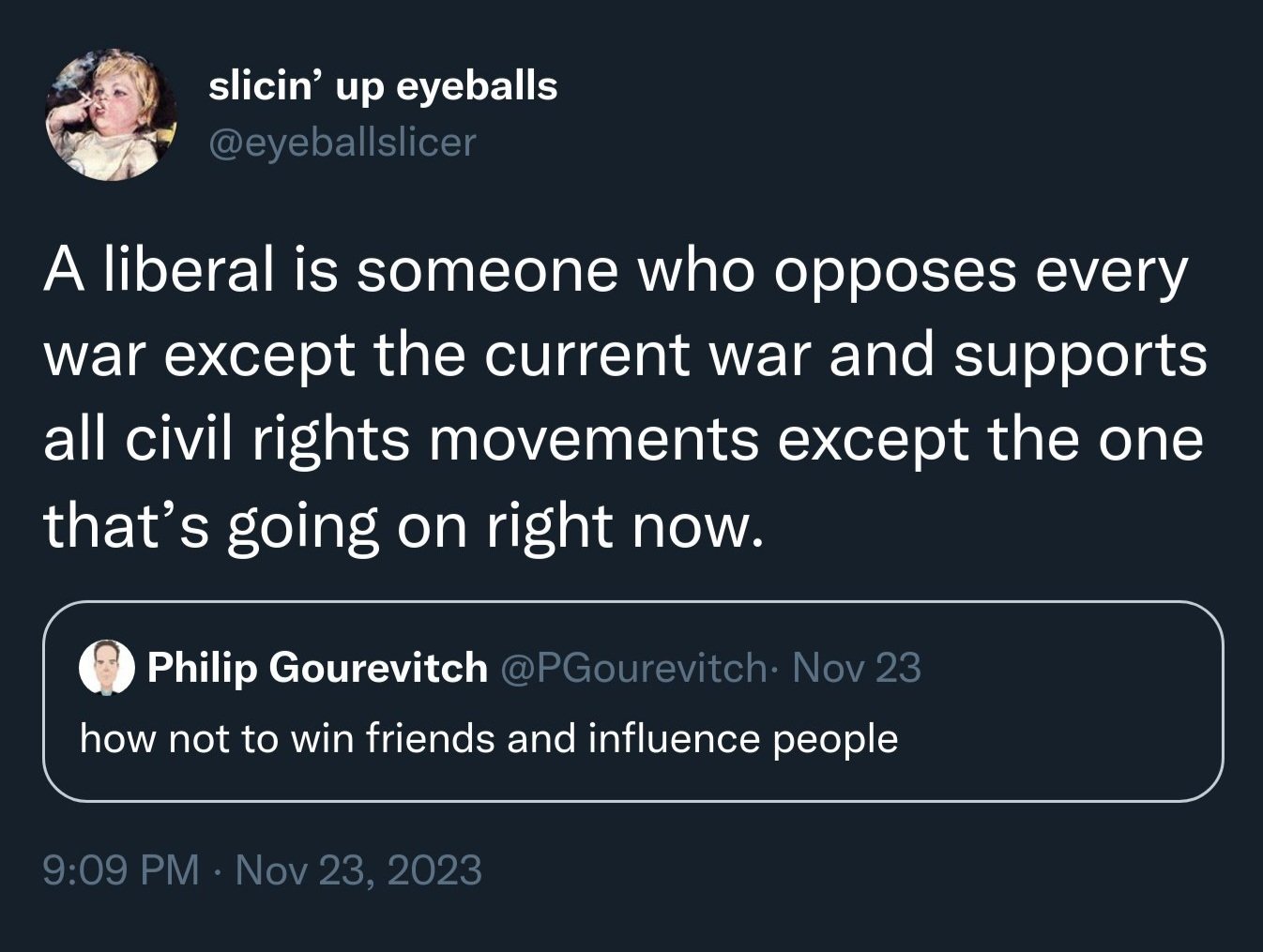

For a more succinct answer:

It’s obviously tongue-in-cheek, but it gets the point across lol

are they also going to roleplay the memes

i sure hope so

That’s exactly the kind of comment I’d expect from a MF called “SatansMaggotyCumFart”

Looked up her name on Twitter to see what people were saying about this. That was a mistake 🙄

A lot of people seem to hate her for whatever reason. She was far from perfect, but all things considered I think she did fine as CEO, and I never got the hate. I can’t imagine how hard it must be to manage a company as big and complex as YouTube.